When forming their own views on trends, users pay close attention to other users' points of view. The ratio between the numbers of users holding different opinions plays an important role in how a user's view forms. Naturally, some actors have an interest in shaping users' trends and opinions. Their methods of influence are far more sophisticated than mere spam. In particular, an entire community promoting a given trend may be created artificially.

A user who ends up in such a community may get the false impression that its trend is supported by a great number of users and must therefore be well-reasoned, analyzed, and unbiased. A user who sees only a selection of trends will find it very difficult to detect that the communities giving rise to them are artificial. Such artificial trends may be created in discussions of political, social, economic, or financial issues.

Artificial communities can be detected through long-term observation of the informational streams on a given topic. Analysis of the quantitative characteristics of the communities that form can reveal anomalies. Communities that exhibit these anomalies may be regarded as anomalous and thus excluded from further consideration and from the informational stream.

It is therefore interesting and important to analyze social network informational streams concerning events in Ukraine.

Using the Twitter API, we collected tweets over several days, filtering on keywords such as Ukraine.

Users mention other users in their tweets and quote them by retweeting their messages. This makes it possible to establish connections among users and to build a graph that shows them. Communities can then be singled out on such a graph using various existing approaches. One popular approach is based on the notion of modularity, which compares the density of connections between vertices inside a community with that of connections to vertices outside it.
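To make the graph construction and the modularity notion concrete, here is a minimal sketch in plain Python. It is not the code used in this study. The function names (`build_graph`, `modularity`, `greedy_communities`), the `(author, text)` tweet format, and the sample tweets are all assumptions made for illustration. The merging loop is a deliberately naive version of greedy modularity optimization; practical implementations use far more efficient data structures.

```python
import re
from collections import defaultdict

MENTION = re.compile(r"@(\w+)")  # also matches the "RT @user:" retweet prefix


def build_graph(tweets):
    """Undirected adjacency map built from (author, text) pairs."""
    adj = defaultdict(set)
    for author, text in tweets:
        author = author.lower()
        adj[author]  # keep authors who mention nobody as isolated vertices
        for other in MENTION.findall(text.lower()):
            if other != author:
                adj[author].add(other)
                adj[other].add(author)
    return dict(adj)


def modularity(adj, communities):
    """Q = sum over communities of e_c / m - (d_c / 2m)^2."""
    m = sum(len(nbrs) for nbrs in adj.values()) / 2  # number of edges
    if m == 0:
        return 0.0
    q = 0.0
    for members in communities:
        inside = sum(1 for u in members for v in adj[u] if v in members) / 2
        degree = sum(len(adj[u]) for u in members)
        q += inside / m - (degree / (2 * m)) ** 2
    return q


def greedy_communities(adj):
    """Start from singletons; repeatedly apply the merge that raises Q most."""
    communities = [{u} for u in sorted(adj)]
    best_q = modularity(adj, communities)
    while len(communities) > 1:
        best = None
        for i in range(len(communities)):
            for j in range(i + 1, len(communities)):
                merged = (communities[:i] + communities[i + 1:j]
                          + communities[j + 1:]
                          + [communities[i] | communities[j]])
                q = modularity(adj, merged)
                if best is None or q > best[0]:
                    best = (q, merged)
        if best[0] <= best_q + 1e-12:  # no merge improves Q: stop
            break
        best_q, communities = best
    return communities


# Hypothetical sample: two groups of three users linked by a single mention.
tweets = [
    ("a", "@b @c hello"), ("b", "RT @c: x"), ("c", "@d hi"),
    ("d", "@e @f"), ("e", "RT @f: y"),
]
graph = build_graph(tweets)
found = greedy_communities(graph)
print(found, modularity(graph, found))
```

In this sketch, mentions and retweets are treated alike and edges are unweighted. Weighting edges by the number of interactions would be a natural refinement, but the text does not say which variant was used.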

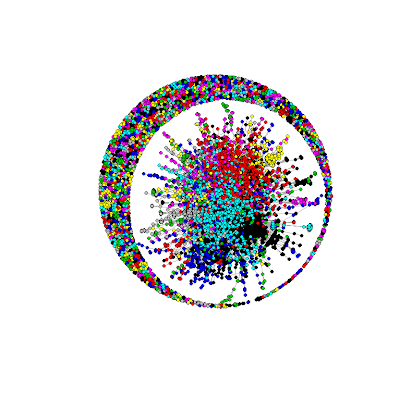

To identify the communities that formed dynamically in the discussion, we used a fast greedy modularity optimization algorithm. To lay out the graph, we used the Fruchterman-Reingold algorithm, which belongs to the family of force-directed, or spring, algorithms. The appearance of the graph follows from the model these algorithms use: vertices are treated as balls that repel one another, and edges are treated as springs that attract the vertices they connect. We built a network with user communities marked in different colours:

[Figures: user networks built from 3,000, 10,000, and 50,000 random tweet samples]
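The force model behind the layout can be sketched as follows. This is a simplified illustration rather than the implementation used to draw the figures. The function name `fruchterman_reingold`, its parameters (`iterations`, `size`, `seed`), and the linear cooling schedule are assumptions, and the clamping of positions to a drawing frame found in the original algorithm is omitted. Every pair of vertices repels with force k²/d, and every edge attracts its endpoints with force d²/k, where d is the current distance and k the ideal edge length.

```python
import math
import random


def fruchterman_reingold(adj, iterations=50, size=1.0, seed=0):
    """Return {vertex: [x, y]} for a symmetric adjacency map."""
    rng = random.Random(seed)
    nodes = sorted(adj)
    index = {v: i for i, v in enumerate(nodes)}
    pos = {v: [rng.uniform(0, size), rng.uniform(0, size)] for v in nodes}
    if len(nodes) < 2:
        return pos
    k = size / math.sqrt(len(nodes))  # ideal edge length: sqrt(area / n)
    temp = size / 10                  # maximum move per iteration
    cooling = temp / (iterations + 1)
    for _ in range(iterations):
        disp = {v: [0.0, 0.0] for v in nodes}
        # Repulsion: every pair of vertices pushes apart with force k^2 / d.
        for i, u in enumerate(nodes):
            for v in nodes[i + 1:]:
                dx = pos[u][0] - pos[v][0]
                dy = pos[u][1] - pos[v][1]
                dist = max(math.hypot(dx, dy), 1e-9)
                force = k * k / dist
                disp[u][0] += dx / dist * force
                disp[u][1] += dy / dist * force
                disp[v][0] -= dx / dist * force
                disp[v][1] -= dy / dist * force
        # Attraction: each edge pulls its endpoints together with force d^2 / k.
        for u in nodes:
            for v in adj[u]:
                if index[u] < index[v]:  # visit each undirected edge once
                    dx = pos[u][0] - pos[v][0]
                    dy = pos[u][1] - pos[v][1]
                    dist = max(math.hypot(dx, dy), 1e-9)
                    force = dist * dist / k
                    disp[u][0] -= dx / dist * force
                    disp[u][1] -= dy / dist * force
                    disp[v][0] += dx / dist * force
                    disp[v][1] += dy / dist * force
        # Move each vertex along its net force, capped by the temperature.
        for v in nodes:
            dx, dy = disp[v]
            length = max(math.hypot(dx, dy), 1e-9)
            step = min(length, temp)
            pos[v][0] += dx / length * step
            pos[v][1] += dy / length * step
        temp -= cooling
    return pos


# Hypothetical example: two triangles joined by one edge.
example = {
    "a": {"b", "c"}, "b": {"a", "c"}, "c": {"a", "b", "d"},
    "d": {"c", "e", "f"}, "e": {"d", "f"}, "f": {"d", "e"},
}
layout = fruchterman_reingold(example)
```

Because connected vertices attract while all vertices repel, densely connected groups tend to end up close together, which is why communities appear as visible clusters in such drawings.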

Our analysis detected several large communities with different adhesion coefficients and different quantitative characteristics of influencer activity. An analysis of the top trends in communities with a zero adhesion coefficient showed that their influencers are anomalous, and their social identity is rather difficult to establish. By contrast, in the large communities with the maximum adhesion coefficient, the top influencers are well-known Ukrainian and European politicians and news agencies.

One conclusion of this study is that a zero or minimal adhesion coefficient points to the anomalous nature of a community, whereas a high adhesion coefficient indicates that the community is effective and productive.
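The text does not define the adhesion coefficient. One possible reading, stated here purely as an assumption, is the edge connectivity of the community's subgraph (sometimes called group adhesion): the minimum number of edges whose removal disconnects it. The sketch below computes that quantity with unit-capacity augmenting paths. The function names `max_flow` and `adhesion` are hypothetical, as is the convention that a community with fewer than two members has adhesion 0.

```python
from collections import deque


def max_flow(adj, s, t):
    """Number of edge-disjoint paths between s and t (unit capacities)."""
    cap = {(u, v): 1 for u in adj for v in adj[u]}
    flow = 0
    while True:
        # Breadth-first search for an augmenting path in the residual graph.
        parent = {s: None}
        queue = deque([s])
        while queue and t not in parent:
            u = queue.popleft()
            for v in adj[u]:
                if v not in parent and cap[(u, v)] > 0:
                    parent[v] = u
                    queue.append(v)
        if t not in parent:
            return flow
        # Push one unit of flow back along the path found.
        v = t
        while parent[v] is not None:
            u = parent[v]
            cap[(u, v)] -= 1
            cap[(v, u)] += 1
            v = u
        flow += 1


def adhesion(adj, members):
    """Edge connectivity of the subgraph induced by `members` (assumed reading)."""
    members = set(members)
    sub = {u: {v for v in adj.get(u, ()) if v in members} for u in members}
    if len(sub) < 2:
        return 0
    nodes = sorted(sub)
    source = nodes[0]
    # In an undirected graph the global minimum cut separates the fixed
    # source from at least one other vertex, so one source is enough.
    return min(max_flow(sub, source, target) for target in nodes[1:])


# Hypothetical examples.
triangle = {"a": {"b", "c"}, "b": {"a", "c"}, "c": {"a", "b"}}
path = {"a": {"b"}, "b": {"a", "c"}, "c": {"b"}}
split = {"a": {"b"}, "b": {"a"}, "c": {"d"}, "d": {"c"}}
```

Under this reading, a zero value means the community's members are not all connected to one another within the community, while higher values mean more redundant links among members.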

Our next step was to remove the tweets of users identified as members of anomalous communities. As a result, we obtained the following graphs of users:

[Figures: user networks built from 10,000 random tweet samples, one with 3 anomalous communities found and removed and one with 10 anomalous communities found and removed]
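The removal step itself is a simple filter. The sketch below assumes tweets are stored as `(author, text)` pairs, that communities are sets of lower-case user names, and that the indices of the anomalous communities are already known. The name `remove_anomalous` and the sample data are hypothetical.

```python
def remove_anomalous(tweets, communities, anomalous_ids):
    """Drop tweets whose author belongs to a community flagged as anomalous."""
    blocked = set()
    for idx in anomalous_ids:
        blocked |= set(communities[idx])
    return [(author, text) for author, text in tweets
            if author.lower() not in blocked]


# Hypothetical sample: community 1 has been flagged as anomalous.
sample_tweets = [
    ("alice", "@bob what do you think?"),
    ("bot1", "RT @bot2: buy now"),
    ("bot2", "buy now"),
    ("bob", "@alice not sure"),
]
sample_communities = [{"alice", "bob"}, {"bot1", "bot2"}]
kept = remove_anomalous(sample_tweets, sample_communities, anomalous_ids=[1])
```

The user graph is then rebuilt from the remaining tweets. This filter removes only tweets written by flagged users. Whether mentions of flagged users in other people's tweets should also be dropped is a separate design choice that the text does not address.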

Removing anomalous communities is an important step in mining trend data, because it gives a more realistic picture of what users actually think. Social networks could also benefit from an additional service that filters the activity of users from anomalous communities or informs other users about suspicious users and informational streams.

In further studies, we plan to analyze other types of anomalous informational streams using formal concept analysis, the theory of semantic fields, and the theory of frequent itemsets and association rules.